Digital technologies are changing the landscape of healthcare service delivery and raising patient expectations about where, when and how they engage with providers and payers. Leading organizations are responding to these challenges and opportunities by implementing patient-centric communications and analytical tools and changing how they deliver core services – transforming their business models, operations and the patient experience in the process. To understand the legitimate potential offered by these tools, we need to unpack the buzzwords and examine the benefits and risks of specific digital capabilities – and then consider what they enable in a healthcare service delivery setting.

The following six core principles should be at the heart of every digital transformation initiative, large or small. While we have found these primary drivers to be applicable across various industry settings, here we outline their specific relevance to Healthcare.

1. Business Driven. Many digital technology initiatives in healthcare are driven by one or more core elements of the Triple Aim:

· Improve the health of populations – this principle is driving virtually every organization to identify and track populations of high-risk, over-utilizing patients; establish agreed-upon outcomes goals for defined segments and strata with similar characteristics or needs; and measure the impact of care plans tailored for each individual patient;

· Reduce the per-capita costs of care – value-based reimbursement programs and other risk-based arrangements are focusing attention on both clinical outcomes and financial results, driving the need for self-service analytics that let patients, providers and payers measure the actual costs of care delivery for each patient;

· Improve the patient’s experience of receiving health care services – increasing transparency and coordinating patient-focused care across an expanding set of partners and providers helps to deliver the right care at the right time in the right setting – increasing patient satisfaction and improving compliance with care plans.

All the above elements are driving the need for better integration of primary service delivery processes and the resulting data streams – motivating an increasing availability of business intelligence (BI) and analytics capabilities and an omni-channel communication platform across the entire enterprise value chain. Digital technologies must be part of every aspect of the overall business-level strategy.

How are you anticipating the needs for and incorporating the capabilities of digital devices and data streams into your business execution and communications strategies?

2. Data is a Core Asset. Organizations that define, measure and adjust their operations using diverse and relevant data sets will realize many performance advantages – to the benefit of all stakeholders.

· Assembling Good Data – capturing enterprise information in digital format – and verifying the quality of those data sets against defined standards for completeness, accuracy and veracity – is an absolute foundation for preparing and enabling digital transformation. The core data systems for the execution of primary transactions and analysis of results must be credible and trustworthy – and this is only achieved – like any relationship – over a period of consistent behavior and positive results.

· Not a Simple Task – for many, this is a major challenge and a significant hurdle to overcome. Most operations are dependent upon data sets that originate in multiple legacy source systems – many of which are too narrowly focused or too closely aligned with aging or inflexible business applications. Understanding the actual contents of these older systems is challenging – envisioning their utility and engineering their transformation for novel purposes represents the “heavy lifting” of data integration. These efforts are difficult to quantify based on a direct ROI – and they are very often on the critical path to deploying and making effective use of newer digital technologies. However, opening these core assets to more transparent use by diverse participants will very often yield unanticipated benefits.

· Incremental Strategy – many organizations will not be able to re-architect their data systems from the ground up; in these cases, an incremental approach is much more viable. Most organizations will begin with a more focused implementation, building the data supply lines to capture and move data from core operational sources into a data warehouse or set of data stores optimized for BI and analytics.

· Managing Data as an Asset – proactive data governance that designates authoritative sources, establishes and enforces quality criteria, defines and assigns roles and responsibilities for managing defined data sets, and facilitates the use of data for various purposes is a critical aspect of any successful implementation.

· Anticipating Scale – the incorporation of so-called “big data” is also growing in importance in healthcare. The volume, variety and value of these expanding and emerging data sets are driving further elaboration of the data flows, validation criteria, storage approaches and dissemination for novel use cases and analytical applications.
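As an illustration of what verifying data against a defined completeness standard might look like in practice, here is a minimal Python sketch. The field names in `REQUIRED_FIELDS`, the 95% threshold and the record layout are all assumptions made for the example, not part of any particular system or standard.

```python
# Hypothetical required fields for a patient record; a real governance
# program would define these per data set.
REQUIRED_FIELDS = ("patient_id", "birth_date", "primary_provider")


def completeness(records, required=REQUIRED_FIELDS):
    """Return, for each required field, the share of records that populate it."""
    total = len(records)
    if total == 0:
        return {field: 0.0 for field in required}
    return {
        field: sum(1 for r in records if r.get(field) not in (None, "")) / total
        for field in required
    }


def failing_fields(records, threshold=0.95, required=REQUIRED_FIELDS):
    """List the fields whose completeness falls below the assumed threshold."""
    scores = completeness(records, required)
    return sorted(field for field, score in scores.items() if score < threshold)
```

A report like this, run against each source system on a regular schedule, is one simple way to track whether data quality is improving over time.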

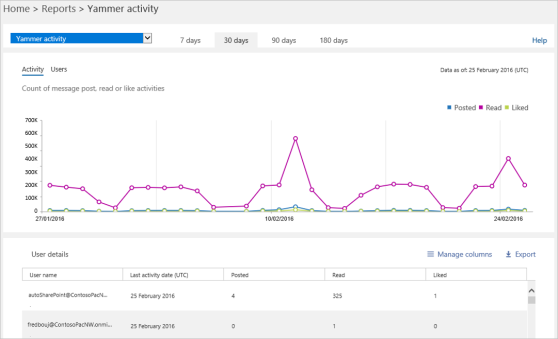

3. Actionable Analytics. Digital interactions – whether improved access to diverse data sources or primary transactions – are most valuable when self-service users can make timely and informed decisions and take appropriate actions based on what the data is indicating.

As the scope, diversity and ubiquity of digital devices continue to grow, the capture and dissemination of data will spread – and more users will be better informed about both the specific details and the broader context of their operating choices.

· Patients can access their care plans – staying completely up to date on their responsibilities for complying with expectations on medications, lab results, diet, exercise and follow-up appointments, and monitoring their overall progress toward agreed-upon clinical goals;

· Providers can access populations – and can stratify sub-segments of their panels according to clinical risk and compliance – tailoring their communications and interventions to keep patients on-track with their outcomes goals;

· Payers can review patient populations and provider networks – identifying attributed patient groups against value-based performance goals and profiling provider effectiveness in meeting clinical and financial goals on risk contracts and alternative payment models.

All these capabilities empower the various user groups to more clearly understand and localize the issues and factors underlying excellent or poor performance – and focus the reinforcing or remedial actions to the benefit of all stakeholders.
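The provider-side stratification described above can be sketched in a few lines of Python. This is only an illustrative sketch: the `risk_score` and `compliance_rate` fields, their 0-to-1 scales and the cutoff values are assumptions chosen for the example, not clinical guidance.

```python
def stratify(panel, risk_cutoff=0.7, compliance_cutoff=0.8):
    """Group patient IDs into (risk, compliance) strata.

    Each patient is a dict with hypothetical keys "patient_id",
    "risk_score" and "compliance_rate", the latter two scaled 0 to 1.
    """
    strata = {}
    for patient in panel:
        risk = "high_risk" if patient["risk_score"] >= risk_cutoff else "low_risk"
        compliance = (
            "compliant"
            if patient["compliance_rate"] >= compliance_cutoff
            else "non_compliant"
        )
        strata.setdefault((risk, compliance), []).append(patient["patient_id"])
    return strata
```

A care team might, for example, direct outreach first to the `("high_risk", "non_compliant")` group, where tailored communication is likely to matter most.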

4. Patient-Centered Experience. A key driver and a widely recognized benefit of the increasing availability of digital technologies is their ability to both stimulate demand and meet the rising expectations of patients for convenient access to all forms of healthcare information and services through their hand-held or wearable devices.

· Ubiquity – the emergence of the “connected anywhere, information everywhere” operating experience has given patients greater power and influence in engaging and steering their relationship with providers. So-called “activated patients” are more equipped to make informed choices and take the initiative to research their conditions, identify and understand their care alternatives, communicate and coordinate with care providers, exchange stories and find support from other patients in shared-need communities, set agreed-upon goals for their care with their providers, and measure their results.

· Flexibility – providers can no longer hold fast to rigid or single-stream operating models – imposing their internal structures, processes and workflows onto patients from the inside out. For digitally-enabled patients, the care experience is becoming much more of a self-directed journey. Providers who recognize this reorientation to facilitate the “Patient Journey” and unbundle and organize their delivery of services according to this revised model will realize greater patient satisfaction with their own care experiences, better compliance with care plans, and improved outcomes – both clinical and financial.

· Adaptability – similarly, payers are coming under increasing pressure to unbundle and adapt to the disaggregating needs and demands of their patients (members). Patients are seeking customized configurations of benefits packages that are more cost-effective and focused on their specific anticipated needs for services. These trends will continue to play out as more patients enter the individual market for health insurance products and payers are forced to adapt and devise new benefits plans.

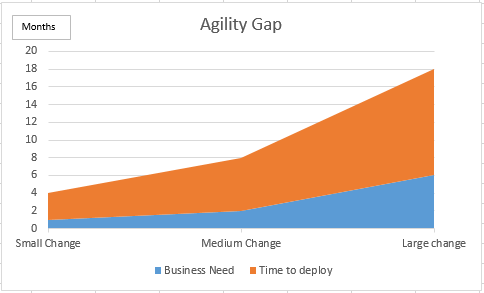

5. Agile Technologies, Agile Processes. Agility must be a core value throughout the transformation effort – it must pervade every aspect of envisioning, defining, designing and implementing solutions in this continually evolving setting. The unbundling of service components and their flexible deployment and execution on-demand to patients and other users will create new challenges for providers.

· Feedback and Response – having an agile structure will enable more responsive delivery models – and capturing data at each point of interaction and each touch point along the Patient Journey enables near-real-time analysis of service delivery, care compliance, and their impact on outcomes. Detailed care experience data can then be fed back into processes and workflows to enable greater personalization, better communication, and more accurate and effective segmentation for population analytics.

The commitment to an agile operation carries additional demands and benefits that must be considered as part of the transformation strategy:

· De-Coupling – tightly coupled databases, applications, custom code, execution logic and various other technical components can complicate the process of revising or enhancing services and their operation – an agile architecture will mandate an explicit de-coupling and un-bundling of tightly-bound components.

· Rapid Application Development – the technical environment and the operational culture must encourage and enable experimentation – where minimally vetted ideas can be prototyped and evaluated – facilitating an ongoing and in some sense relentless exploration of new areas for improvement or innovation.

· Infrastructure – the cloud provides clearly defined, precisely tuned and proactively managed capacity for service delivery and data access, ready to be activated (or deactivated) as demand ramps up and down. Responsive and adaptive provisioning of computing resources increases both the effectiveness and the efficiency of business operations and the satisfaction of stakeholders.

· Cloud Orchestration – these unbundling and decoupling features combine to enable and facilitate a more agile operation. The execution model for the primary data sources and system services becomes one of flexible activation and deactivation of cloud-deployed capacities at a more granular level – tuned to the needs and demands of the external users rather than the constraints of internal operations.

6. Security & Access Control. The increased openness of these services demands more rigorous and reliable levels of security – including data security, application security, data encryption, compliance with regulations, and more informative monitoring of the ongoing state of the systems. Threats to online computing resources continue to rise as hacking, data theft, and denial-of-service attacks increase in number and sophistication. Added attention to risk management, strict adherence to appropriate security standards, and regular audits must be part of any such initiative.

The increasing availability of digital technologies is reinforcing expectations of timeliness, flexibility and convenience among patients, caregivers, providers and payers in an evolving ecosystem of service delivery and information exchange. The relentless focus on quality and outcomes, cost control, value creation, and satisfaction will continue to drive innovation in service delivery across an expanding and diversifying network of healthcare industry participants. Organizations and individuals that respond and adapt will realize distinct advantages in both clinical and financial performance.