Digital Transformation has become a hot buzzword recently: Microsoft has adopted it as the overarching theme for its cloud-based business apps, and it is the subject of many studies from McKinsey & Company, Gartner and other research firms.

I wanted to share our approach and some of the lessons we have learned working with companies in industries such as Insurance and Manufacturing on their digital transformation initiatives.

A transformation does not happen overnight. It is a long and sometimes painful process that, to be honest, never really ends. The rate of innovation and change is increasing, and new business and customer needs will constantly emerge.

Therefore, our approach is very much grounded in the concept of agility: the right foundation, built with change in mind. In such an approach, it is not always beneficial to try to document every future requirement and work out how to accommodate it. It is better to have a very strong foundation and an agile, open framework that can be easily adapted.

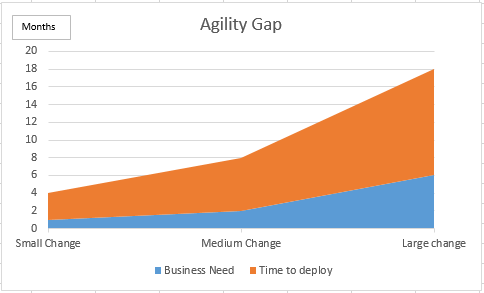

A good way to judge your current agility level is to perform a Digital Agility Gap test. For the small, medium and large changes the business has requested in the last year, what was the gap between when the business wanted to see the change made and when your organization was able to deploy it? The larger the gap, the more acute the need for a comprehensive digital transformation.
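The gap test can be run on a simple export of last year's change requests. The following is a minimal sketch, not a standard tool: the `ChangeRequest` fields, the size labels and the sample dates are all assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class ChangeRequest:
    # Hypothetical record layout; adapt to whatever your ticketing system exports.
    size: str          # "small", "medium" or "large"
    wanted_by: date    # when the business wanted the change live
    deployed_on: date  # when it was actually deployed


def agility_gap_days(requests):
    """Return the mean gap in days between wanted and actual deployment, per size."""
    gaps = defaultdict(list)
    for r in requests:
        # A change delivered early counts as a zero gap, not a negative one.
        gaps[r.size].append(max((r.deployed_on - r.wanted_by).days, 0))
    return {size: mean(days) for size, days in gaps.items()}


# Made-up sample data, for illustration only.
sample_requests = [
    ChangeRequest("small", date(2023, 1, 10), date(2023, 1, 24)),
    ChangeRequest("small", date(2023, 3, 1), date(2023, 3, 7)),
    ChangeRequest("medium", date(2023, 2, 1), date(2023, 4, 2)),
    ChangeRequest("large", date(2023, 1, 1), date(2023, 7, 1)),
]

gap_by_size = agility_gap_days(sample_requests)
print(gap_by_size)
```

With this sample data the average gap is 10 days for small changes, 60 for medium and 181 for large. Tracking the same numbers year over year is one way to see whether the gap is actually closing.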

The following 7 core principles should drive every digital transformation initiative, large or small:

- Business Driven. This may sound obvious, but every digital initiative needs a business rationale and a business sponsor. Technology can be a game changer, but very often the digital channel needs to be part of an omni-channel approach. eCommerce can augment retail stores or distribution channels but will not replace them for a long while. Digital must be part of the overall business and market strategy. The new role of Chief Digital Officer is a great example of how organizations integrate digital as a business channel with broad responsibilities and a chair at the executive table. The digital aspect needs to be part of every major organizational strategy, not a separate one. For example, if you are launching a new product, how will you design it, support its manufacturing and supply chain, and market, sell and support it using digital means?

- Data is King. Having enterprise information available in digital format with a single source of truth is the absolute foundation of a digital transformation. Without “good data”, garbage in, garbage out will produce inconsistent results and systems people can’t trust. This is usually the hardest part for many companies, as organizational data may reside in many legacy systems and be too intimately tied to old business applications. It is also hard work: hard to understand and hard to put a direct ROI on. It is not glamorous and will not be visible to most people. In lieu of a complete data re-architecture, most organizations start with master data management and a data warehouse or operational datamarts to get around the limitations of the various systems where data is actually stored. The imperative is to know what the single source of truth is and to abstract the details through a data access layer and services. The emerging area of Big Data allows capturing and processing ever larger amounts of data, especially data related to customer interactions. Data flows, validation and storage need to be looked at again with a new vision of what data is captured and how it is stored, processed and managed.

- Actionable Analytics. Many organizations have invested heavily in Business Intelligence and use decision support systems to run analyses and produce reports. The expanding scope of data capture and processing now allows analytics to serve as actionable triggers for real-time decisions and for other systems. For example, your website’s ability to make customer-specific product recommendations can be the result of a real-time process that analyzes the customer, looks at what similar customers have bought, and runs an RFM (recency, frequency, monetary value) analysis to assign the customer a tier and derive relevant offers. Marketing campaigns can likewise target prospects based on predictive analytics. Closed-loop analysis is critical for understanding the impact of decisions or campaigns. The ability to see the connection between an offer or search campaign and the revenue it generated is the foundation of future investment decisions.

- Customer Centricity. One of the main drivers and benefits of the digital transformation is the ability to meet the new world of customer expectations and needs. Customers want access to information and the ability to take action and interact anytime, anyplace, from any device. The new Digital Experience maps to the customer lifecycle, journey or buying flow, and data is collected at every point of interaction to feed personalization, targeting and marketing. When done correctly, an intelligent user experience will improve engagement, loyalty and conversion. In designing a new digital user experience, we usually recommend mapping the user interactions across all touch points and focusing on finding common needs rather than taking a “Persona” driven approach. Personas, in our experience, are too generic and lead to oversimplification of the model.

- Agility in Technology and Process. Agility is at the heart of our approach; without it you would go through a transformation every few years. It is broader than just IT and affects many business and operational processes. A few key concepts in planning for agility:

  - De-coupling. A large part of what makes changes hard is the intertwined nature of most IT environments: proprietary databases, older applications without outside interfaces, hard-coded database calls in code, heavily customized but dated applications, and so on. The solution is to de-couple the elements and create a modular, service-oriented architecture. Data should be separated from logic, services and user interaction, allowing each tier to grow and evolve without requiring a complete system rewrite. For example, the biggest driver of transformation in the last few years has been the user experience and the need to support users on various mobile devices. A de-coupled architecture would allow a UX overhaul using the same services and backend.

  - Agile / Rapid application development. Application development needs to be able to create prototypes and test ideas on a regular basis. For that to happen, the process of defining, designing, implementing and testing software has to be more responsive to business needs. Whether following Agile Methodology principles or just a more iterative version of traditional models, application development has to be able to quickly show business users what they would get, and adopt a minimum viable product approach to releasing software. An emerging model of continuous delivery allows faster, automated deployment of software when it is ready.

  - Cloud and Infrastructure agility. The emergence of cloud services is making agile environments much easier to implement. From an infrastructure perspective, you no longer need to invest in hardware resources for your worst-case load scenario. The ability to get just as much computing capacity as needed on demand, and to scale in a matter of minutes, makes platforms like AWS and Azure very appealing. Many applications are now offered only in cloud-based versions, and even large players like Microsoft and Oracle are now pressuring all customers to move to the cloud versions of their applications. The ability to easily plug a cloud application into the environment is the ideal of agility. With a common security and authentication layer, the modern corporate application landscape is composed of many different cloud applications, made available to each user based on their role and integrated to a degree that makes the user experience as seamless as possible.

  - In addition to the environment, software and infrastructure, organizational processes have to be more flexible too. Change management needs to become a process that enables change, not one that stops it.

- Process Automation. With the new landscape comprising so many different and independent applications, process automation that leverages the open interfaces of those applications is becoming critical. Traditional Business Process Management applications are now morphing into cloud orchestration, with the ability to create processes that span multiple applications and can be managed and updated by business users without IT involvement.

- Security. Last but not least, the open, flexible nature of the future landscape described here requires new levels of security that should be an integral part of all facets of the environment. Data security and encryption, services security, security in application design: all layers and components have to account for the rising threats of hacking, data theft and denial of service, which are more prevalent than ever. We see this as the primary concern for companies looking to adopt a more digital and agile environment, and a strong emphasis on risk management, security standards and audits should be a primary component of any digital transformation initiative.
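To make the RFM tiering mentioned under Actionable Analytics more concrete, here is a minimal sketch of how a customer could be scored on recency, frequency and monetary value and assigned a tier. The `CustomerActivity` fields, the fixed thresholds and the tier names are all assumptions for illustration. Real RFM models often derive the cut-offs from quantiles of the customer base rather than hard-coding them.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class CustomerActivity:
    # Hypothetical summary of one customer's purchase history.
    last_purchase: date
    order_count: int
    total_spend: float


def score(value, thresholds):
    """Return 1 plus the number of thresholds the value meets or exceeds."""
    return 1 + sum(value >= t for t in thresholds)


def rfm_tier(customer, today):
    """Assign a tier from recency, frequency and monetary scores (each 1 to 3)."""
    days_since = (today - customer.last_purchase).days
    # Recency: fewer days is better, so compare negated values.
    r = score(-days_since, (-180, -30))             # 3 if within 30 days
    f = score(customer.order_count, (3, 10))        # illustrative cut-offs
    m = score(customer.total_spend, (200.0, 1000.0))
    total = r + f + m                               # ranges from 3 to 9
    if total >= 8:
        return "gold"
    if total >= 5:
        return "silver"
    return "bronze"


# Made-up customers, for illustration only.
today = date(2024, 6, 1)
loyal = CustomerActivity(date(2024, 5, 20), 12, 1500.0)
occasional = CustomerActivity(date(2024, 3, 1), 4, 350.0)
lapsed = CustomerActivity(date(2023, 1, 15), 1, 40.0)

for name, c in [("loyal", loyal), ("occasional", occasional), ("lapsed", lapsed)]:
    print(name, rfm_tier(c, today))
```

The resulting tier can then drive which offers the site shows, and the revenue from those offers can be fed back to adjust the thresholds, which closes the loop described above.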

